Although search engines tend to favor authoritative websites and popular brands in their rankings, technical SEO offers smaller companies and up-and-coming brands an affordable way to stand out from the crowd.

If you handle technical SEO the right way, you may earn a spot on the coveted first page of search engine results for the keywords you are targeting.

To outrank renowned brands and websites in your niche, however, you need a well-thought-out technical SEO strategy.

That’s why we have compiled a list of technical SEO best practices to help you get your site in shape. Keep reading to learn more.

Technical SEO Best Practices to Improve Your Site for Ranking

1. Ensure all resources on your site are crawlable

It doesn’t matter how useful and informative the content on your web pages is. If it cannot be crawled and indexed, it will remain hidden from your target audience. That’s why you should begin your technical SEO audit by checking your robots.txt file.

When search engine bots land on your website, the first file they request is robots.txt. This file tells them which parts of your site they may crawl and which they should stay out of, using ‘Allow’ and ‘Disallow’ rules for different URL paths.

The robots.txt can be accessed by anyone and can be found by adding /robots.txt to the end of a root domain.

By specifying the pages you don’t want search engine bots to crawl, you save server resources, bandwidth, and your assigned crawl budget (more on this later). At the same time, make sure you don’t accidentally mark important pages as ‘Disallow,’ or bots will skip crawling them.

You should, therefore, check your robots.txt file to ensure all the important pages of your site are crawlable so they can rank on search engines. Combined with the crawl reports in Google Search Console, the review will also show you where crawl budget is being wasted, which pages you have prioritized for crawling, and whether bots ran into problems crawling your site.
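If you are comfortable with a little scripting, Python’s standard-library `urllib.robotparser` module can check a list of URLs against robots.txt rules. The sketch below parses an example robots.txt held in a string; the domain, the paths, and the `IMPORTANT_URLS` list are hypothetical placeholders for your own.

```python
from typing import List
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content. For a live site you could instead call
# parser.set_url("https://example.com/robots.txt") followed by parser.read().
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /blog/
Allow: /
"""

# Hypothetical pages that must stay crawlable. The blog post is deliberately
# caught by "Disallow: /blog/" above to show what a mistake looks like.
IMPORTANT_URLS = [
    "https://example.com/",
    "https://example.com/services/",
    "https://example.com/blog/technical-seo-guide/",
]


def blocked_important_urls(robots_txt: str, urls: List[str], user_agent: str = "Googlebot") -> List[str]:
    """Return the URLs that the given robots.txt rules would block for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


if __name__ == "__main__":
    for url in blocked_important_urls(ROBOTS_TXT, IMPORTANT_URLS):
        print("Blocked by robots.txt:", url)
```

One caveat: Python’s parser applies rules in file order (the first matching rule wins), whereas Google uses the most specific matching rule, so results can differ when Allow and Disallow rules overlap.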

2. Check for indexing

After ensuring all the important pages are crawlable, you need to know whether they are being indexed. Yes, search engine bots can crawl your pages and still fail to index them. There are several ways to check for indexing. One is Google Search Console, where the Coverage report shows the status of each page on your site.

In this report, you can see pages that have been successfully indexed (valid), pages that are indexed but have warnings, pages with errors, and pages excluded from indexing along with the reason why.

You can also check for indexing by running a crawl with reliable crawling software such as Screaming Frog. The free version crawls up to 500 URLs; for more, you need the paid version. The software reports which URLs are indexable and which are non-indexable, along with the reason. Non-indexable pages cannot appear in SERPs and therefore cannot rank.
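To see the kind of signals such a crawler looks at, here is a minimal sketch of an indexability check using only Python’s standard library. It treats a page as non-indexable if its `<meta name="robots">` tag or its `X-Robots-Tag` response header contains `noindex`, or if its canonical link points to a different URL. The `indexability` function and its inputs are hypothetical; real crawlers also weigh status codes, robots.txt rules, and more.

```python
from html.parser import HTMLParser
from typing import List, Optional, Tuple


class IndexSignalParser(HTMLParser):
    """Collects robots meta directives and the canonical URL from a page's HTML."""

    def __init__(self) -> None:
        super().__init__()
        self.robots_directives: List[str] = []
        self.canonical: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        # HTMLParser lowercases tag and attribute names; attribute values may be None.
        attr = {name: (value or "") for name, value in attrs}
        if tag == "meta" and attr.get("name", "").lower() == "robots":
            self.robots_directives += [d.strip().lower() for d in attr.get("content", "").split(",")]
        elif tag == "link" and "canonical" in attr.get("rel", "").lower().split():
            self.canonical = attr.get("href")


def indexability(url: str, html: str, x_robots_tag: str = "") -> Tuple[bool, str]:
    """Return (is_indexable, reason) from a page's HTML and its X-Robots-Tag header value."""
    parser = IndexSignalParser()
    parser.feed(html)
    header_directives = [d.strip().lower() for d in x_robots_tag.split(",")]
    if "noindex" in parser.robots_directives:
        return False, "noindex in meta robots tag"
    if "noindex" in header_directives:
        return False, "noindex in X-Robots-Tag header"
    # A canonical pointing elsewhere asks search engines to index that other URL instead.
    if parser.canonical and parser.canonical.rstrip("/") != url.rstrip("/"):
        return False, f"canonicalized to {parser.canonical}"
    return True, "indexable"
```

For example, `indexability("https://example.com/b/", '<meta name="robots" content="noindex, follow">')` reports the page as non-indexable because of the meta robots tag.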

An even easier way to check how many pages have been indexed is Google’s site: search operator. In the Google search bar, enter your domain in the format “site:yourdomain.com” (no space after the colon) and hit enter. Google will list the pages from your site that it has indexed; treat the result count as an estimate. If there is a big difference between the number of pages you think your site has and the number indexed, dig further. If the indexed count is higher than you expected, check for duplicate pages and make sure they are canonicalized. If it is lower, some of your web pages are not being indexed.

By using these three techniques, you can get a clear picture of how Google has indexed your site and make changes accordingly.

3. Optimize your crawl budget

Google allocates a crawl budget to every website based on several criteria it does not fully disclose. Crawl budget is the number of pages search engines will crawl on your site within a given period. You can get a sense of it from the Crawl Stats report in Google Search Console, which shows how many crawl requests Google makes to your site, although it does not state a crawl budget figure directly.

Once you understand your crawl budget, take measures to optimize it. Google is widely believed to allocate crawl budget based partly on the number of internal links pointing to a page and the number of backlinks it has.

You can optimize your crawl budget by removing duplicate pages you don’t need. Search engines crawl duplicate pages too, which wastes crawl budget. You can also stop search engines from crawling pages that have no SEO value, such as terms and conditions and privacy policy pages, by adding them to the ‘Disallow’ rules in your robots.txt file.
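Exact duplicates are easy to find with a script. The sketch below groups URLs whose page text is identical after collapsing whitespace and ignoring case, using a SHA-256 hash of that text. The `pages` dictionary is hypothetical sample data standing in for text you have already extracted from your pages; near-duplicates that differ only slightly need fuzzier techniques than an exact hash.

```python
import hashlib
import re
from collections import defaultdict
from typing import Dict, List


def find_duplicate_groups(pages: Dict[str, str]) -> List[List[str]]:
    """Group URLs whose text is identical after normalizing whitespace and case."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for url, text in pages.items():
        normalized = re.sub(r"\s+", " ", text).strip().lower()
        digest = hashlib.sha256(normalized.encode("utf-8")).hexdigest()
        groups[digest].append(url)
    # Only hashes shared by more than one URL indicate duplicates.
    return [sorted(urls) for urls in groups.values() if len(urls) > 1]


# Hypothetical sample data: URL -> extracted page text.
pages = {
    "https://example.com/shoes": "Red running shoes. Free delivery.",
    "https://example.com/shoes?sort=price": "Red  running shoes.\nFree delivery.",
    "https://example.com/about": "About our company.",
}

for group in find_duplicate_groups(pages):
    print("Duplicates:", group)
```

For each group, keep one version and either remove the others or point them to it with a canonical tag.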

Another way to optimize your crawl budget is to fix broken links, since Google will still crawl them.

Doing this reserves more of your budget for the pages you want Google to index. Keep in mind that once your crawl budget is used up, the remaining pages will be crawled later, which delays their indexing and ranking.

4. Review your sitemap

One of the most important technical SEO best practices is having a structured, comprehensive sitemap. Your XML sitemap lists the URLs you want search engines to discover, helping their crawlers find and crawl your pages.

Here are the things to do to optimize your sitemap.

- Your sitemap should be an XML document.

- It should only include canonical versions of your URLs.

- It should not include non-indexable URLs.

- It should follow the XML sitemap protocol.

- It should be updated with every new page you create.

If you use the Yoast SEO plugin, it can create an XML sitemap for you. Make sure your sitemap includes all your most important pages and none of your non-indexable pages. After updating your sitemap, resubmit it in Google Search Console.
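If you are not on WordPress, or just want to see what such a file contains, this sketch builds a minimal sitemap with Python’s `xml.etree.ElementTree` and applies two of the checklist rules: only indexable pages that are their own canonical URL go in. The page-record format (`url`, `canonical`, `indexable`, `lastmod`) is a hypothetical stand-in for data from your CMS or a crawl.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages: List[Dict[str, object]]) -> str:
    """Return sitemap XML containing only indexable pages that are their own canonical URL."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for page in pages:
        # Skip non-indexable pages and non-canonical duplicates.
        if not page.get("indexable") or page.get("canonical") != page["url"]:
            continue
        url_el = ET.SubElement(urlset, "url")
        ET.SubElement(url_el, "loc").text = str(page["url"])
        if page.get("lastmod"):
            # W3C date format, e.g. 2024-01-15
            ET.SubElement(url_el, "lastmod").text = str(page["lastmod"])
    return ET.tostring(urlset, encoding="utf-8", xml_declaration=True).decode("utf-8")


if __name__ == "__main__":
    example_pages = [
        {"url": "https://example.com/", "canonical": "https://example.com/", "indexable": True},
        {"url": "https://example.com/?ref=ad", "canonical": "https://example.com/", "indexable": True},
    ]
    print(build_sitemap(example_pages))
```

The second example page is left out because its canonical URL points to the homepage.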

5. Take Google’s mobile-friendliness test

In 2018, Google announced it was rolling out mobile-first indexing, meaning it primarily uses the mobile version of a page, rather than the desktop version, for indexing and ranking. The change reflects the fact that most users now browse on mobile phones.

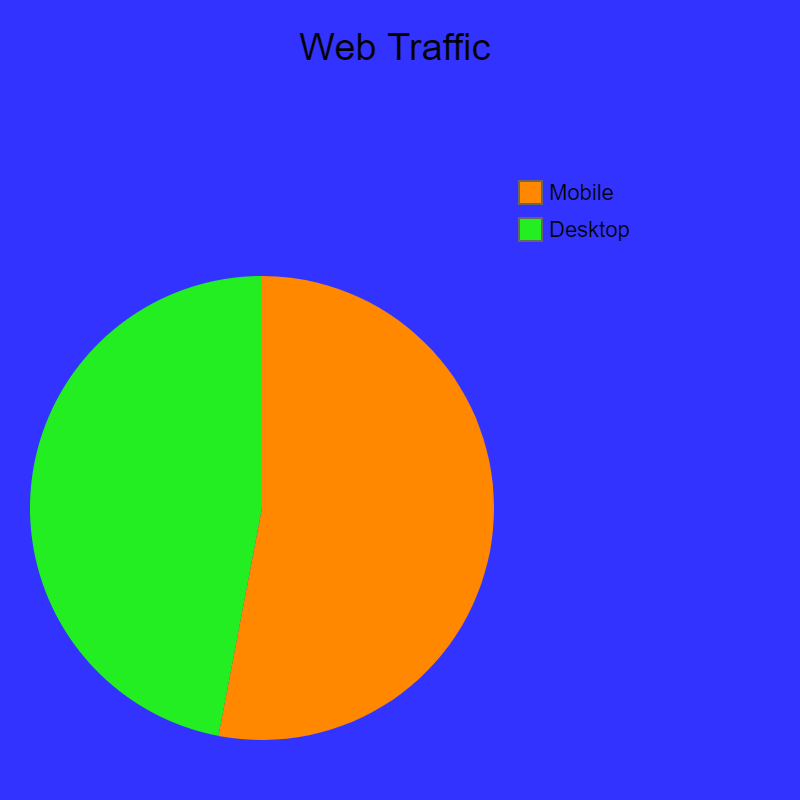

With over 52% of global internet traffic coming from mobile devices, it is vital to ensure your website is mobile-friendly.

You can use Google’s free Mobile-Friendly Test to check whether your pages are mobile-responsive. Just enter a page URL, and the tool will tell you whether the page is mobile-friendly.
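As a rough first check you can run yourself, this sketch looks for a viewport meta tag that scales the page to the device width, the basic declaration responsive pages use (for example `<meta name="viewport" content="width=device-width, initial-scale=1">`). The function is a hypothetical heuristic, not a substitute for Google’s test: a page can have the tag and still be hard to use on a phone.

```python
from html.parser import HTMLParser
from typing import Optional


class ViewportFinder(HTMLParser):
    """Records the content of the first <meta name="viewport"> tag, if any."""

    def __init__(self) -> None:
        super().__init__()
        self.content: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "viewport" and self.content is None:
            self.content = attr.get("content") or ""


def has_responsive_viewport(html: str) -> bool:
    """True if the page declares a viewport that scales to the device width."""
    finder = ViewportFinder()
    finder.feed(html)
    if finder.content is None:
        return False
    return "width=device-width" in finder.content.replace(" ", "").lower()
```

A page with a fixed viewport such as `width=1024`, or with no viewport tag at all, fails this check.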

You can also open your site on your phone and navigate across different pages to see how mobile-friendly it is.

If your pages are not yet mobile-friendly, make them so immediately. Don’t miss out on mobile traffic and better rankings on Google.

6. Check your site’s internal links

A shallow, logical site structure is an indicator of a great user experience. By site structure, we mean how your web pages are organized and linked together. A good site structure helps spread ranking power, popularly known as PageRank or link equity, efficiently across your pages.

Here are the things to look out for to keep your site structure logical.

i. Click depth

Keep your site structure shallow, with your important pages no more than three clicks from your homepage.
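Click depth is the length of the shortest click path from the homepage, so a breadth-first search over your internal links computes it. This sketch assumes you already have a link graph, for example from a crawler export; the `site_links` data is hypothetical.

```python
from collections import deque
from typing import Dict, List


def click_depths(links: Dict[str, List[str]], home: str) -> Dict[str, int]:
    """Breadth-first search from the homepage; returns the minimum clicks needed to reach each page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # the first visit in BFS is via a shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical internal-link graph: page -> pages it links to.
site_links = {
    "/": ["/services", "/blog"],
    "/blog": ["/blog/page-2"],
    "/blog/page-2": ["/blog/page-3"],
    "/blog/page-3": ["/blog/old-post"],
}

too_deep = [page for page, depth in click_depths(site_links, "/").items() if depth > 3]
print(too_deep)  # ['/blog/old-post']
```

Pages that never appear in the result are unreachable from the homepage, which overlaps with the orphan-page check below.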

ii. Redirected links

Limit the number of redirects between a link and its destination, even if visitors eventually land on the right page. Long redirect chains waste crawl budget and slow down page loading. Look for links that pass through more than three redirects and update them to point directly to the final URL.
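Given a map of which URL redirects where, following a chain to its end is a short loop. This sketch assumes such a source-to-target map (for example, exported from your server configuration or a crawler); the `REDIRECTS` data and the three-hop threshold mirror the advice above and are illustrative.

```python
from typing import Dict, List


def redirect_chain(start: str, redirects: Dict[str, str]) -> List[str]:
    """Follow a source -> target redirect map from start; return every URL visited, final destination last."""
    chain = [start]
    while chain[-1] in redirects:
        next_url = redirects[chain[-1]]
        if next_url in chain:
            raise ValueError("redirect loop: " + " -> ".join(chain + [next_url]))
        chain.append(next_url)
    return chain


# Hypothetical redirect map.
REDIRECTS = {
    "/old-pricing": "/pricing-2021",
    "/pricing-2021": "/pricing-2022",
    "/pricing-2022": "/pricing-2023",
    "/pricing-2023": "/pricing",
}

chain = redirect_chain("/old-pricing", REDIRECTS)
hops = len(chain) - 1
if hops > 3:
    print(f"{chain[0]} goes through {hops} redirects; link straight to {chain[-1]}")
```

The loop check matters in practice: a misconfigured pair of redirects pointing at each other would otherwise never end.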

iii. Broken links

Broken links not only eat up your crawl budget and ranking power but also confuse your visitors. Keep the number of broken links on your site as low as possible.
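Finding broken links comes down to matching each internal link with the HTTP status its target returns. In this sketch, `fetch_status` uses a HEAD request from Python’s `urllib.request`, and `find_broken_links` flags any target answering 4xx or 5xx. The `SITE_LINKS` and `STATUS_CODES` data are hypothetical, standing in for your own crawl results.

```python
import urllib.error
import urllib.request
from typing import Dict, List, Tuple


def fetch_status(url: str, timeout: float = 10.0) -> int:
    """Return the HTTP status code for url via a HEAD request (urllib follows redirects)."""
    request = urllib.request.Request(url, method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # urllib raises 4xx and 5xx responses as HTTPError; its code is the status we want.
        return err.code


def find_broken_links(links: Dict[str, List[str]], status: Dict[str, int]) -> List[Tuple[str, str, int]]:
    """Return (source page, target, status) for each link whose target answered 4xx or 5xx."""
    broken = []
    for source, targets in links.items():
        for target in targets:
            code = status.get(target)
            if code is not None and code >= 400:
                broken.append((source, target, code))
    return broken


# Hypothetical crawl data: internal links per page, and the status each target returned
# (in practice collected with fetch_status or exported from a crawler).
SITE_LINKS = {"/": ["/about", "/old-offer"], "/blog": ["/about", "/missing-post"]}
STATUS_CODES = {"/about": 200, "/old-offer": 404, "/missing-post": 410}

for source, target, code in find_broken_links(SITE_LINKS, STATUS_CODES):
    print(f"{source} links to {target} ({code})")
```

Some servers reject HEAD requests with a 405 status, so retry those URLs with a normal GET before treating them as broken.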

iv. Orphan pages

These are pages that no other page on your site links to, which makes them hard for search engines and visitors to discover. Make sure all your web pages are linked into your site structure, grouped by category.
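A crawler that starts from your homepage cannot find orphan pages on its own, because nothing links to them. You need a full list of URLs from another source, such as your sitemap or CMS, and then compare it with the pages that receive at least one internal link. The inputs in this sketch are hypothetical.

```python
from typing import Dict, List, Set


def find_orphan_pages(all_pages: Set[str], links: Dict[str, List[str]], home: str = "/") -> Set[str]:
    """Return pages that receive no internal link from any other page (the homepage is exempt)."""
    linked_to = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # a page linking to itself does not count
    }
    return all_pages - linked_to - {home}


# Hypothetical inputs: every URL you know about, and the internal links found by a crawl.
ALL_PAGES = {"/", "/services", "/blog", "/blog/post-1", "/landing/spring-sale"}
SITE_LINKS = {
    "/": ["/services", "/blog"],
    "/blog": ["/blog/post-1"],
    "/blog/post-1": ["/blog/post-1", "/"],
}

print(find_orphan_pages(ALL_PAGES, SITE_LINKS))  # {'/landing/spring-sale'}
```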

7. Test for page speed

Google uses page speed as one of its ranking factors. Having a website that is fast, mobile-responsive, and user-friendly is now essential if you want to rank high in SERPs.

You can test your site’s speed with different tools. The most accessible is Google’s free PageSpeed Insights.

If your page fails some of the tool’s tests, Google explains how to fix the issues that are slowing it down. You may also get a download link with compressed versions of the images Google considers too heavy.
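PageSpeed Insights also has a public API, which is handy when you want to check many pages. This sketch assumes the v5 `runPagespeed` endpoint and the `lighthouseResult.categories.performance.score` field of its JSON response (a value between 0 and 1); confirm both against Google’s API reference before relying on them, and note that regular use requires an API key.

```python
import json
import urllib.parse
import urllib.request
from typing import Any, Dict

PSI_ENDPOINT = "https://www.googleapis.com/pagespeedonline/v5/runPagespeed"


def build_psi_url(page_url: str, strategy: str = "mobile", api_key: str = "") -> str:
    """Build a PageSpeed Insights v5 request URL; strategy is "mobile" or "desktop"."""
    params = {"url": page_url, "strategy": strategy}
    if api_key:
        params["key"] = api_key
    return PSI_ENDPOINT + "?" + urllib.parse.urlencode(params)


def fetch_psi(page_url: str, strategy: str = "mobile", api_key: str = "") -> Dict[str, Any]:
    """Call the API and return the parsed JSON (needs network access; audits can take a while)."""
    with urllib.request.urlopen(build_psi_url(page_url, strategy, api_key), timeout=120) as response:
        return json.load(response)


def performance_score(result: Dict[str, Any]) -> int:
    """Lighthouse performance score on a 0-100 scale, from a v5 response."""
    return round(result["lighthouseResult"]["categories"]["performance"]["score"] * 100)
```

With network access, `performance_score(fetch_psi("https://example.com/"))` returns the mobile performance score for that page.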

Other things to check include your server or hosting provider and the number of plugins on your site.

Bottom Line

The strategies discussed above form the foundation of technical SEO, and every website owner should make sure they are in order.

These best practices offer a great start for any marketer looking to have their website perform better in the eyes of search engines. More importantly, they can help improve your ranking considerably.